By Nic Lindh on Monday, 30 November 2015

Almost as disgusting as the Paris attacks themselves were the responses: horrific Islamophobia and thought-short-circuiting fear manifesting all over the I-don’t-know-just-do-something-anything spectrum. In other words, exactly what the assholes who committed the atrocity wanted.

Waiting in the wings were, of course, calls for an end to privacy so that mass surveillance will work better. It turns out the particular asswipes responsible for the Paris atrocity weren’t even using encryption: they did their planning in the clear and moved around under their own identities. But that doesn’t matter. In mass-surveillance thought space, more surveillance is better, and clearly if there had been more surveillance, magic would have kept Paris from happening. QED.

Which is facepalm territory. But these people were allowed their space in the media to push the idea that a terrorist attack clearly means we must have more surveillance to be safe.

This is an argument you can make: Terrorists and pedophiles are scary, so we shouldn’t have any privacy. I very much disagree, but sure, it’s an argument you can make. But recognizing that most people don’t feel that’s a good trade-off, the argument these days is that you, solid citizen, can still have your privacy, but the people who protect you will be able to surveil the pedophiles and the terrorists.

Which is either a bald-faced lie or industrial-strength disingenuousness.

Let’s break it down: The current state of the art in Internet privacy is public key encryption. Basically, privacy on the Internet (like you want when you punch your credit card number into Amazon) rests on key pairs: a private key that stays secret and a public key that anyone can see. Through an amazing amount of high-level math concocted by geniuses, only you and Amazon can read the content that goes between your computer and Amazon. And it happens every time you’re on a website that’s using encryption. It’s the foundation for all privacy on the Internet.
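To make the private key and public key idea a bit more concrete, here is a minimal sketch of textbook RSA, one well-known public key scheme. The tiny primes, the `encrypt` and `decrypt` helper names, and the stand-in secret are all assumptions picked for illustration. Real websites use far larger keys plus padding and key exchange steps that this toy leaves out.

```python
# Toy RSA with tiny textbook primes. Illustration only: real keys are
# thousands of bits long, and real systems add padding and more.
p, q = 61, 53                 # secret primes (hypothetical, far too small)
n = p * q                     # modulus, shared by both keys
phi = (p - 1) * (q - 1)
e = 17                        # public exponent
d = pow(e, -1, phi)           # private exponent (needs Python 3.8+)

public_key = (e, n)           # safe to hand to anyone
private_key = (d, n)          # never leaves its owner


def encrypt(message, key):
    """Anyone holding the public key can lock a message."""
    exponent, modulus = key
    return pow(message, exponent, modulus)


def decrypt(ciphertext, key):
    """Only the holder of the private key can unlock it."""
    exponent, modulus = key
    return pow(ciphertext, exponent, modulus)


secret = 1234                 # stand-in for sensitive data; must be < n
ciphertext = encrypt(secret, public_key)
recovered = decrypt(ciphertext, private_key)

print("on the wire:", ciphertext)
print("recovered  :", recovered)
```

An eavesdropper sees only `ciphertext` and the public key. Without the private exponent `d`, getting back to `secret` means factoring `n`, which is trivial here and believed to be impractical at real key sizes.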

The only way for the good guys to be able to read the transaction that just happened between you and Amazon is for the encryption to be broken, for example if somebody planted an extra private key or built a backdoor into the encryption software you were using. The software has to be broken.

(There’s also traffic analysis to be concerned about. Even though an eavesdropper may not see your credit card, the fact that your computer and Amazon’s are talking is visible.)

It’s a complete fantasy that there’s some way to let the good guys read what you wrote but nobody else. Because, again, the software has to be broken. And what happens when the encryption software is broken? Somebody else will find the way it’s broken and exploit it.

So now it’s not just you, Amazon, and Western state surveillance. It’s you, Amazon, Western state surveillance, and a crime syndicate reading your credit card information.

Breaking encryption is not just something good guys are interested in—there are plenty of terrible, repressive states out there that would just love to read everything citizens are writing so they can throw people into torture chambers. And there are criminals, like the ones who broke into Sony, who would just love to get any kind of information they can for blackmail purposes.

If the public key encryption system works like it should, they can’t. But if it’s broken on purpose so the good guys can get in, anybody else who figures out the flaw can also get in.

That’s where the magical thinking comes in: The very idea that there’s a way to break encryption so that only the right authorities can exploit the flaw is ludicrous. If it’s broken, it’s broken.

As an analogy for the scale we’re dealing with, look at the iPhone. Apple locks its phones down so that you can only do certain things with them. For example, you can only purchase software from Apple’s App Store. You can’t download software from wherever you want.

Some people are really annoyed by this and figure out ways to jailbreak their phones. Jailbreaking means finding a flaw in the security of the phone to allow for a privilege escalation so you can do whatever you want. This means that every time somebody figures out a way to jailbreak the iPhone, what they’ve actually found is a flaw in the security of the phone.

There are always new jailbreaks. This is the most profitable company on the planet, employing some of the best computer engineers money can buy, and they still can’t prevent jailbreaks from happening.

Computer security is hard.

Jailbreaking iPhones is mostly low-stakes. (Mostly—obviously shady characters who want to surveil people are also extremely interested in ways to circumvent Apple’s security so they can load surveillance software.)

Imagine the lengths state actors and criminal syndicates will go to in order to find the vulnerabilities deliberately put into encryption software to provide backdoors for Western governments. That’s a James Bond-level game and the resources put in play are unimaginable.

So let’s stop pretending there’s a way to break encryption that only the good guys will be able to access.

Vulnerabilities will be exploited.

If you’d like to learn more about encryption, The Code Book is a breezy, fun non-technical primer on the history of ciphers and codes. I highly recommend it.

Let’s talk about how fun it is to have a go-cart people mover.

Is there reason to upgrade from a 3 to a 5?

The Internet tells Nic to install Ubiquiti gear in his house, so he does, and now he has thoughts.

What I wish I’d known when I started podcasting.

Nic reports his experiences so far with voice computing from Amazon and Google and is a bit mystified at the reaction to Apple’s HomePod.

After a few weeks of using iPhone X I’m ready to join the congratulatory choir.

Nic is interested in smart homes. His contractor let him know how the wealthy are already using them.

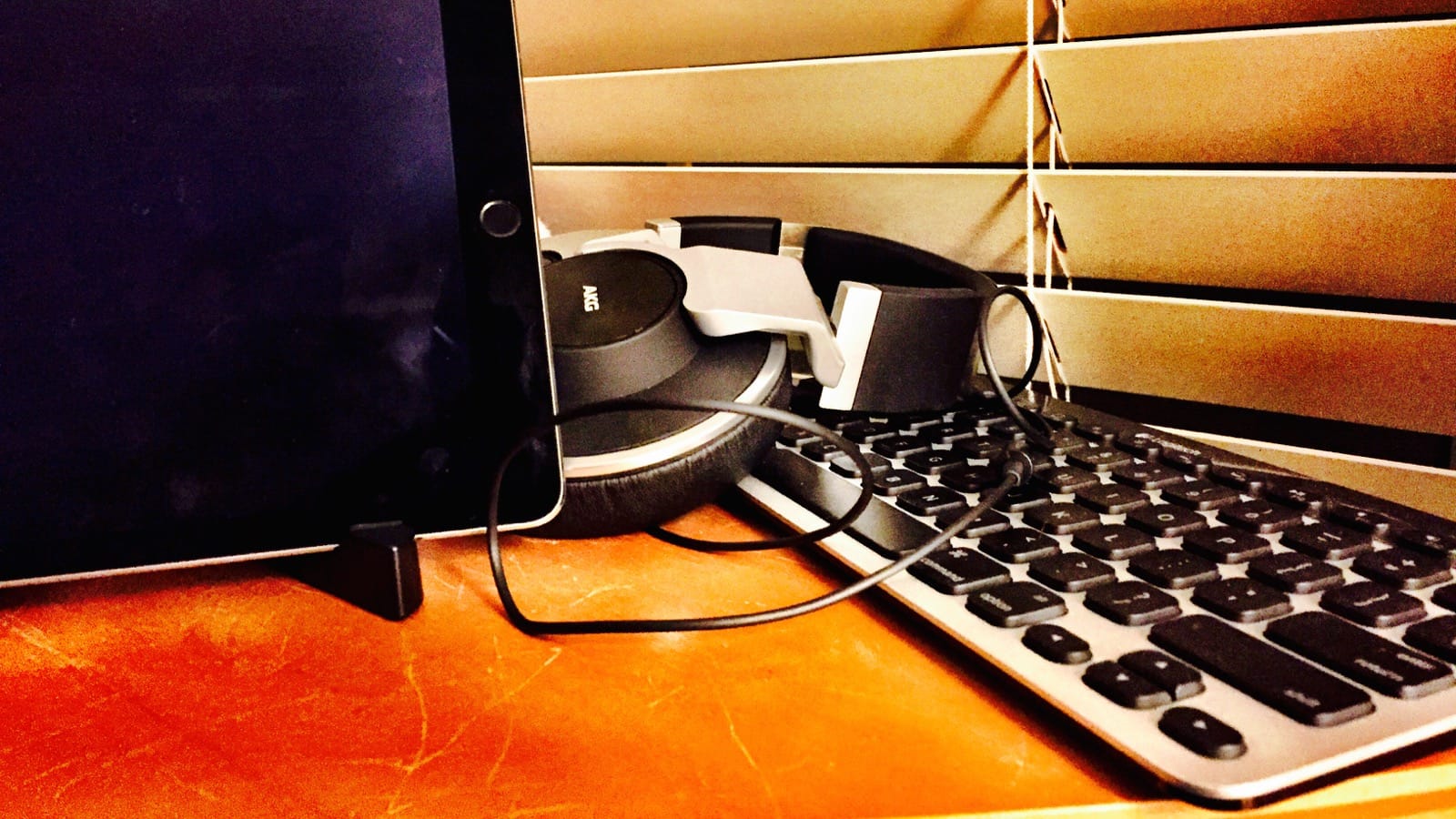

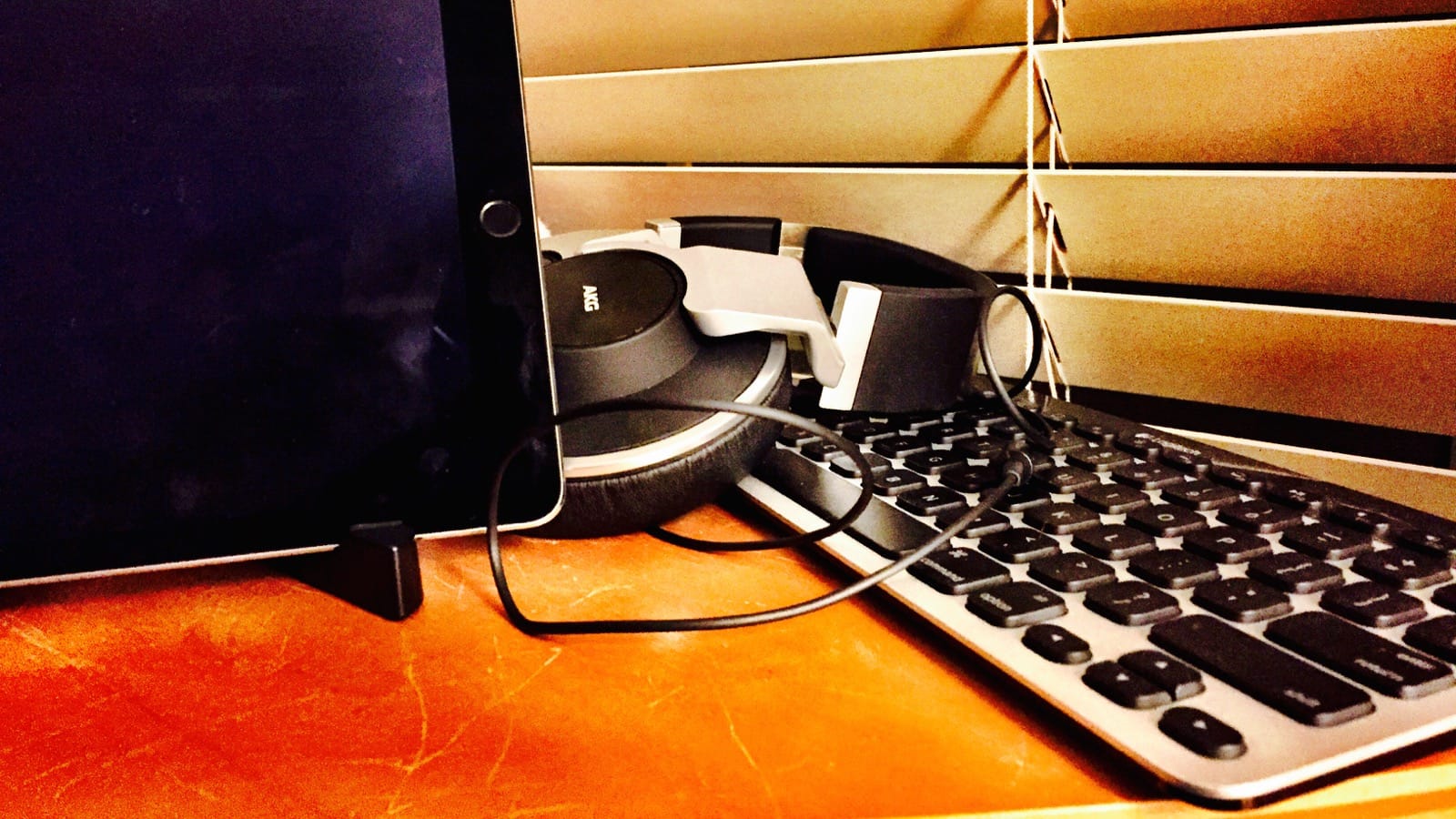

A concise guide to getting started with podcasting, including equipment, editing, mic technique and hosting.

Apple’s neglect of the pro market is causing a lot of gnashing of teeth in Apple-nerd circles, but it’s true to Apple’s vision.

There is unrest in the Mac community about Apple’s commitment to the platform. Some are turning their eyes to building a Hackintosh to get the kind of computer Apple doesn’t provide. Here’s what it’s like to run a Hackintosh.

Car nerds are dealing with some cognitive dissonance as car technology changes.

The Oasis is Amazon’s best e-ink reader to date, but it’s not good enough for the price.

Nic buys an Amazon Echo and is indubitably happy with the fantasy star ship in his head.

The problem isn’t ads. The problem is being stalked like an animal across the internet.

The DS416j is a nice NAS for light home use. Just don’t expect raw power.

The Core Dump is moving to GitHub Pages. This is a good thing, most likely.

Thoughts on Apple Watch after half a year of daily usage.

Things to consider when planning to build a site on a compressed time table.

Nic provides some basic not-too-paranoid tips for securing your digital life.

Installing Jekyll on an EC2 Amazon Linux AMI is easy. Here are the steps.

After wearing the watch for over a month, Nic has thoughts on its future. Spoiler: Depends on how you define success.

Turns out “it's just a big iPhone” is a stroke of genius.

Some technical terms still confuse people who should know better, like journalists.

How to host a static site on Amazon S3 with an apex domain without using Amazon’s Route 53.

People fear change, so new technology is used as as a faster version of the old. This makes technologists sad.

Nic provides a lesson plan for teaching total beginners HTML, CSS and JavaScript.

Nic loves his Pebble and looks forward to the Apple Watch, but realizes he’s in the minority.

Nic loves books, but he loves their content more.

Nic is worried about the fragile state of our technology and thinks you should be as well.

Nic tries to understand the WATCH. It doesn’t go well.

Nic thinks home integration could be Apple’s next major category. Read on to find out why.

Nic is frustrated with his Kindle and would love to see Apple make an e-ink reader.

Nic delves into the shady computer enthusiast underworld of the Hackintosh.

On the Mac’s 30th anniversary, Nic reminisces about his first.

The iPhone was announced Jan. 9, 2007. It now occupies a huge chunk of Nic’s life.

Nic is very impressed with the speed of the iPhone 5S and iPad Air.

Nic tells you how to find a theme for your new site.

Nic buys a Nexus 7 to test the Android waters.

All Nic wants for WWDC is sync that actually works

Nic is ecstatic about the backlighting on the Kindle Paperlight, but Amazon has made some strange design decisions and there’s a display hardware flaw.

Nic makes a new ebook and is dismayed by the sad state of ebook publishing.

One of the equivalences of haircut and clothing on the Internet is your email address.

Nic outlines some of the risks of ceding comments on news stories to Facebook.

Nic is bemused by the sturm und drang surrounding the iOS-ification of Mac OS X.

Web publishing used to require heavy-duty nerditry, but no longer.

Nic is creating an e-book. He shares what he’s learned so far.

Nic really digs e-book readers. No, seriously, he really digs them. And you should, too.

The future and now of personal computing is appliances. This post parses why you shouldn’t worry about it.